How To Access All AI Models In One Place

As the range of AI models expands, selecting the right one for your daily tasks can be overwhelming.

When I started with AI, I faced the problem of too many providers, each requiring a separate subscription. It cost me a small fortune. I had to enter my data everywhere, and transparency was minimal.

“Which AI model should you use for your work?” Do you go with GPT-4.1 for blog optimization or Claude 4 Sonnet for affordable, seamless coding? Will you subscribe to both ChatGPT Plus and Claude Pro just to use those two models?

The answer is NO. Why complicate things with multiple subscriptions when you can access all these powerful AI models in one place? Not just GPT-4.1 and Claude 4 Sonnet, but a whole suite of the latest and most advanced models from providers such as Google (Gemini Pro) and Perplexity, all accessible through a single, unified platform.

Ready to explore how? Let’s dive in!

What are AI Models (LLMs)?

AI models, specifically Large Language Models (LLMs), are advanced artificial intelligence systems trained on vast amounts of text data to understand, generate, and manipulate human language.

Using deep learning and transformer architectures, they can predict word sequences, answer questions, write content, and solve complex tasks.

Many of today's most popular LLMs are also multimodal, meaning they can process and, in some cases, generate other forms of data besides text, such as images, audio, and video.

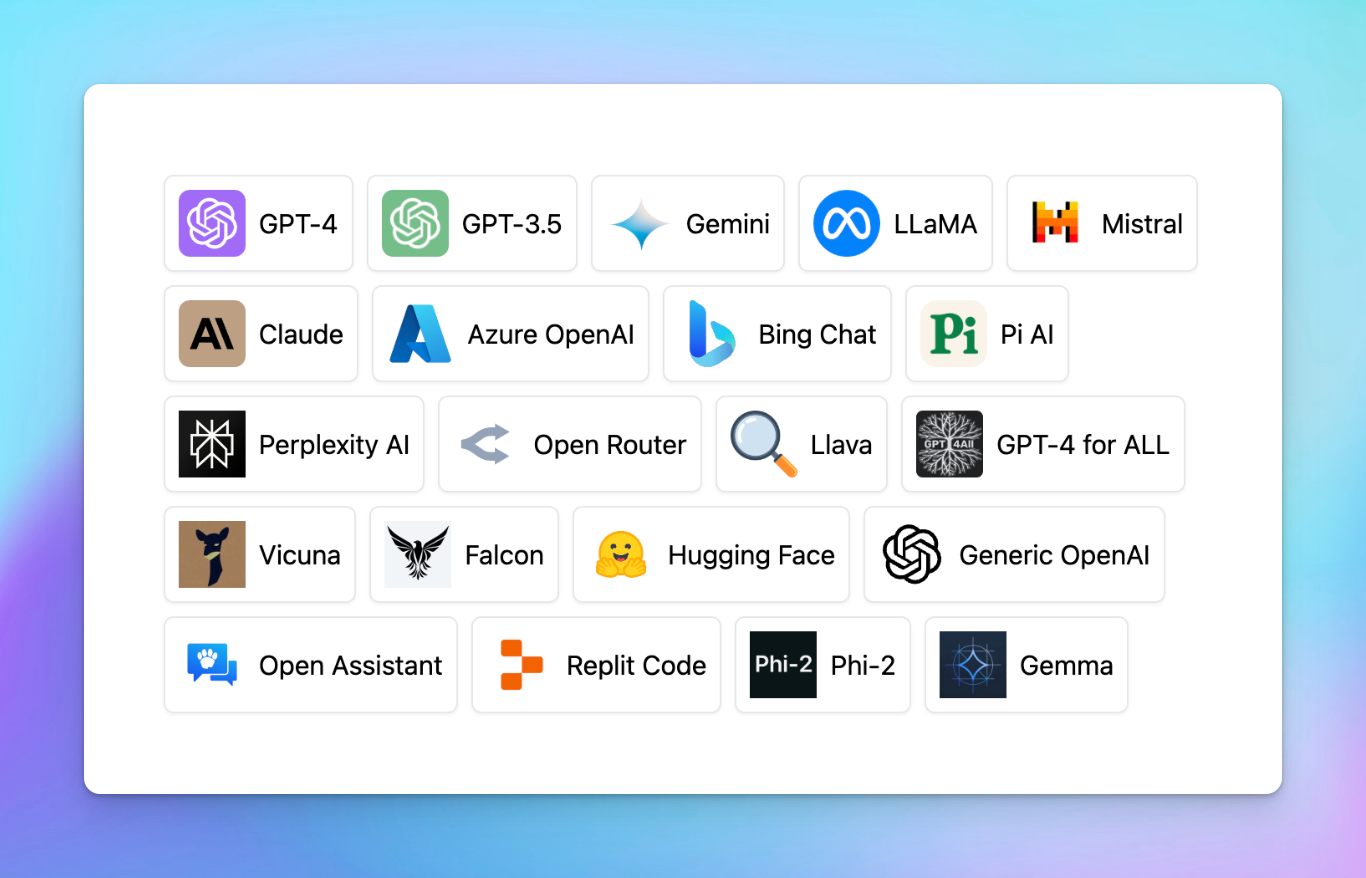

Some example LLMs

Popular AI Models On The Market

Here are some of the best-known AI models that can help you with your tasks, grouped by provider:

| Provider | Example AI models |
|---|---|
| OpenAI | GPT-4o, GPT-4.1 |
| Google | Gemini 2.5 Pro, Gemini 2.0 Flash, Gemma 3, Gemma 2 |
| Anthropic | Claude 4 Sonnet, Claude 3 Opus |
| Microsoft Azure | GPT-4o, GPT-4 Turbo, GPT-4.x |
| Meta | Llama 4, Llama 3, Code Llama |
| Microsoft | Phi-4, Phi-3 Medium |
| Mistral AI | Mistral 7B, Mixtral 8x7B |
| Cohere | Command R+, Command R |
| DeepSeek | DeepSeek Coder V2, DeepSeek V3 Chat |

Why Access Multiple AI Models?

Because AI models vary in performance and cost, some are great for coding while others excel at content creation. Having access to multiple models lets you compare their outputs and costs to find the best one for your needs.

But using them through separate platforms comes with challenges:

- Higher cost: using multiple AI models can quickly become expensive, as each platform requires its own subscription. For instance, ChatGPT Plus and Claude Pro together come to around $40 per month, which is not exactly the most cost-efficient approach.

- Security and privacy concerns: using different platforms may raise concerns about how your data is handled. Each provider has its own rules, making it harder to ensure your personal data stays safe.

Gnoppix AI addresses both challenges. It is an all-in-one AI platform that gives you access to all these AI models in one place, with pay-per-use pricing and stronger privacy protection.

Use Gnoppix AI to Access All AI Models In One Place

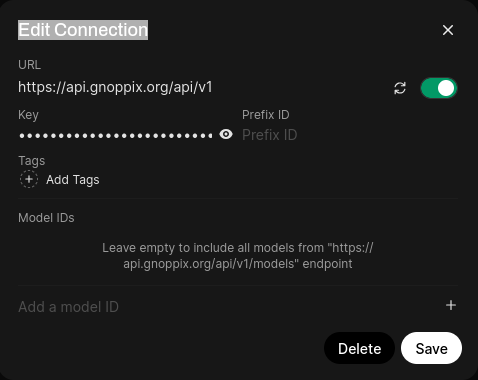

Gnoppix AI is an all-in-one AI platform that lets you interact with multiple Large Language Models (LLMs), such as GPT-4.1, Claude 4 Sonnet, and Gemini 2.5 Pro, as well as many open-source AI models that you can connect through an API.

Gnoppix AI allows you to:

- Connect with as many AI models as you want using one API key, from popular commercial models to open-source and local ones.

- Switch back and forth among different AI models to compare results and find what works best for you.

- Combine the AI models with plugins to access the internet, generate images, and implement RAG.

- Train the AI models with your custom training data.

- Local and Remote RAG Integration

- Document Extraction: Extract text and data from various document formats including PDFs, Word documents, Excel spreadsheets, PowerPoint presentations, and more.

- Web Search for RAG: You can perform web searches using a selection of various search providers and inject the results directly into your local Retrieval Augmented Generation (RAG) experience.

- Full Markdown and LaTeX Support: Elevate your LLM experience with comprehensive Markdown, LaTeX, and Rich Text capabilities for enriched interaction.

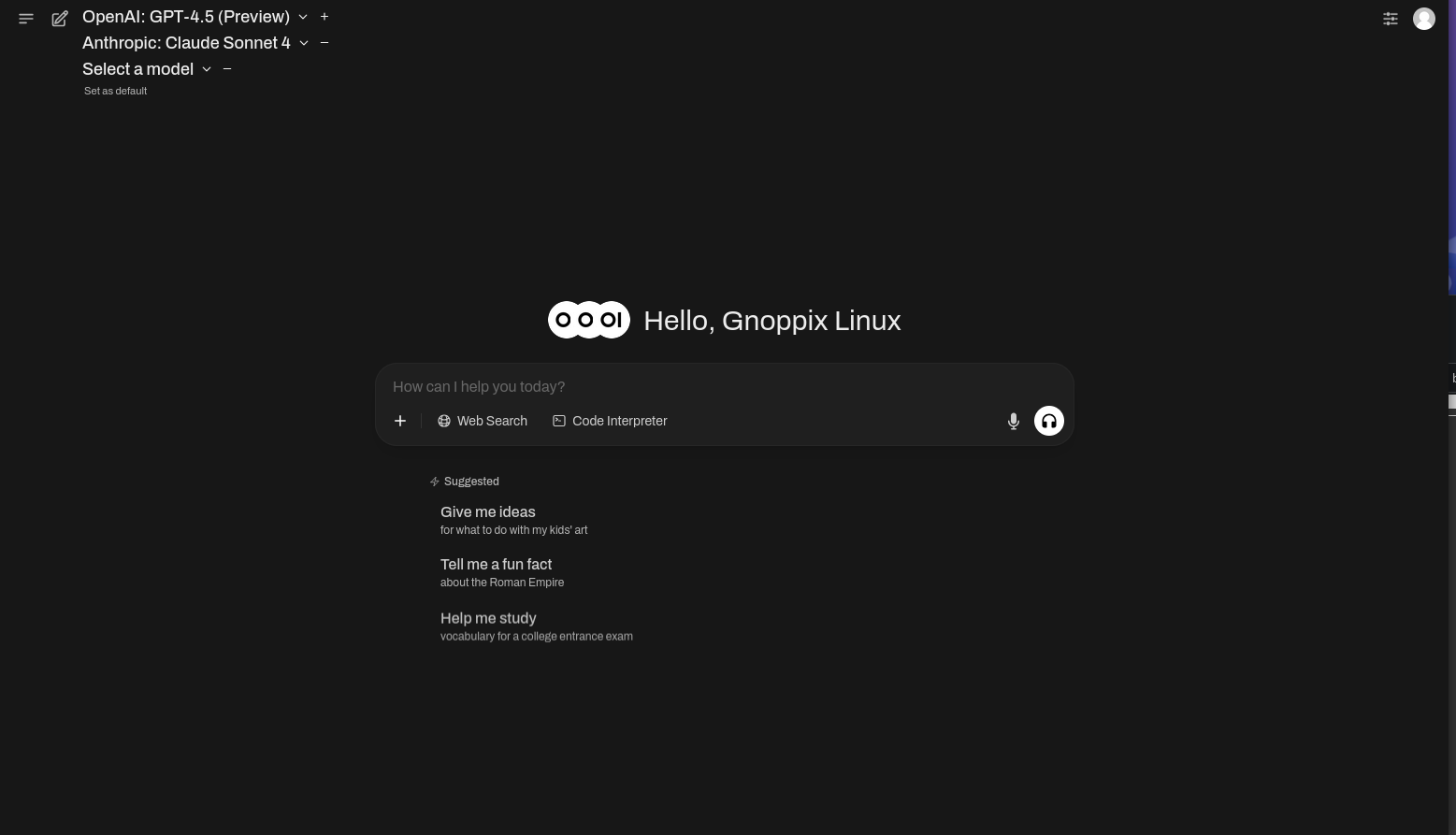

- Concurrent Model Utilization: Effortlessly engage with multiple models simultaneously, harnessing their unique strengths for optimal responses. Leverage a diverse set of model modalities in parallel to enhance your experience.

- Intuitive Interface: The chat interface has been designed with the user in mind, drawing inspiration from the user interface of ChatGPT.

- Swift Responsiveness: Enjoy reliably fast and responsive performance.

- Splash Screen: A simple loading splash screen for a smoother user experience.

- Personalized Interface: Choose between a freshly designed search landing page and the classic chat UI from Settings > Interface, allowing for a tailored experience.

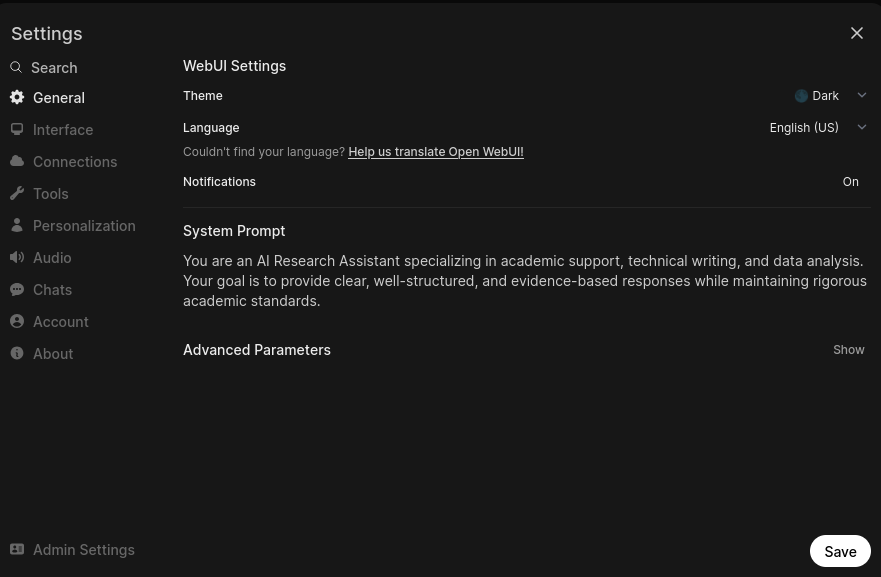

- Theme Customization: Personalize your Open WebUI experience with a range of options, including a variety of solid, yet sleek themes, customizable chat background images, and three mode options: Light, Dark, or OLED Dark mode - or let Her choose for you!

- Custom Background Support: Set a custom background from Settings > Interface to personalize your experience.

- Rich Banners with Markdown: Create visually engaging announcements with markdown support in banners, enabling richer and more dynamic content.

- Code Syntax Highlighting: Our syntax highlighting feature enhances code readability, providing a clear and concise view of your code.

- Markdown Rendering in User Messages: User messages are now rendered in Markdown, enhancing readability and interaction.

- Flexible Text Input Options: Switch between rich text input and legacy text area input for chat, catering to user preferences and providing a choice between advanced formatting and simpler text input.

- Effortless Code Sharing: Streamline the sharing and collaboration process with convenient code copying options, including a floating copy button in code blocks and click-to-copy functionality from code spans, saving time and reducing frustration.

- Interactive Artifacts: Render web content and SVGs directly in the interface, supporting quick iterations and live changes for enhanced creativity and productivity.

- Live Code Editing: Supercharged code blocks allow live editing directly in the LLM response, with live reloads supported by artifacts, streamlining coding and testing.

- Enhanced SVG Interaction: Pan and zoom capabilities for SVG images, including Mermaid diagrams, enable deeper exploration and understanding of complex concepts.

- Text Select Quick Actions: Floating buttons appear when text is highlighted in LLM responses, offering deeper interactions like “Ask a Question” or “Explain”, and enhancing overall user experience.

- Bi-Directional Chat Support: You can easily switch between left-to-right and right-to-left chat directions to accommodate various language preferences.

- Mobile Accessibility: The sidebar can be opened and closed on mobile devices with a simple swipe gesture.

- Haptic Feedback on Supported Devices: Android devices support haptic feedback for an immersive tactile experience during certain interactions.

- User Settings Search: Quickly search for settings fields, improving ease of use and navigation.

- Pinned Chats: Support for pinned chats, allowing you to keep important conversations easily accessible.

- Chat Completion Notifications: Stay updated with instant in-UI notifications when a chat finishes in a non-active tab, ensuring you never miss a completed response.

- YouTube RAG Pipeline: A dedicated Retrieval Augmented Generation (RAG) pipeline summarizes YouTube videos from their URLs, letting you interact directly with the video transcripts.

- Auto-Tagging: Conversations can optionally be automatically tagged for improved organization, mirroring the efficiency of auto-generated titles.

- Fine-Tuned Control with Advanced Parameters: Gain a deeper level of control by adjusting model parameters such as seed, temperature, frequency penalty, context length, and more.

- RLHF Annotation: Rate responses with a thumbs up or thumbs down, producing feedback that can be used for Reinforcement Learning from Human Feedback (RLHF).

- Voice Input Support: Engage with your model through voice interactions; enjoy the convenience of talking to your model directly. Additionally, explore the option for sending voice input automatically after 3 seconds of silence for a streamlined experience.

- Hands-Free Voice Call Feature: Initiate voice calls without needing to use your hands, making interactions more seamless.

- Video Call Feature: Enable video calls with supported vision models like LLaVA and GPT-4o, adding a visual dimension to your communications.

- Versatile, UI-Agnostic, OpenAI-Compatible Plugin Framework: Seamlessly integrate and customize Open WebUI Pipelines for efficient data processing and model training, ensuring ultimate flexibility and scalability.

- Python Code Execution: Execute Python code locally in your browser via Pyodide, with access to the libraries Pyodide supports.

- Mermaid Rendering: Create visually appealing diagrams and flowcharts.

- And much more.
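To make the "one API key, many models" and advanced-parameter features more concrete, here is a minimal Python sketch of how a client might build the same chat request for several models. It assumes an OpenAI-compatible chat completions endpoint; the URL `https://example.invalid/v1/chat/completions`, the model identifiers, and the helper `build_request` are hypothetical placeholders, not Gnoppix AI's documented API. The body fields `temperature`, `seed`, `frequency_penalty`, and `max_tokens` follow the OpenAI Chat Completions format.

```python
import json
import urllib.request
from typing import Optional

# Hypothetical OpenAI-compatible endpoint; replace with the URL your gateway actually exposes.
BASE_URL = "https://example.invalid/v1/chat/completions"


def build_request(
    model: str,
    prompt: str,
    api_key: str,
    *,
    system_prompt: Optional[str] = None,
    temperature: float = 0.7,
    seed: Optional[int] = None,
    frequency_penalty: float = 0.0,
    max_tokens: int = 512,
) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for one model."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": prompt})

    body = {
        "model": model,  # the only field that changes when you switch models
        "messages": messages,
        "temperature": temperature,
        "frequency_penalty": frequency_penalty,
        "max_tokens": max_tokens,
    }
    if seed is not None:  # a fixed seed asks supporting models for more repeatable sampling
        body["seed"] = seed

    return urllib.request.Request(
        BASE_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the same key is reused for every model
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical model identifiers; use the names your provider actually lists.
    models = ["gpt-4.1", "claude-sonnet-4", "gemini-2.5-pro"]
    prompt = "Explain retrieval-augmented generation in one sentence."
    requests_by_model = {
        m: build_request(m, prompt, "YOUR_API_KEY", temperature=0.2, seed=42) for m in models
    }
    for model, req in requests_by_model.items():
        print(model, req.get_method(), json.loads(req.data)["temperature"])
    # Sending one would look like: urllib.request.urlopen(requests_by_model["gpt-4.1"])
```

Because only the `model` field differs between requests, comparing models side by side is a one-line change. Not every provider honors every parameter (for example `seed`), so outputs may still vary between runs.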

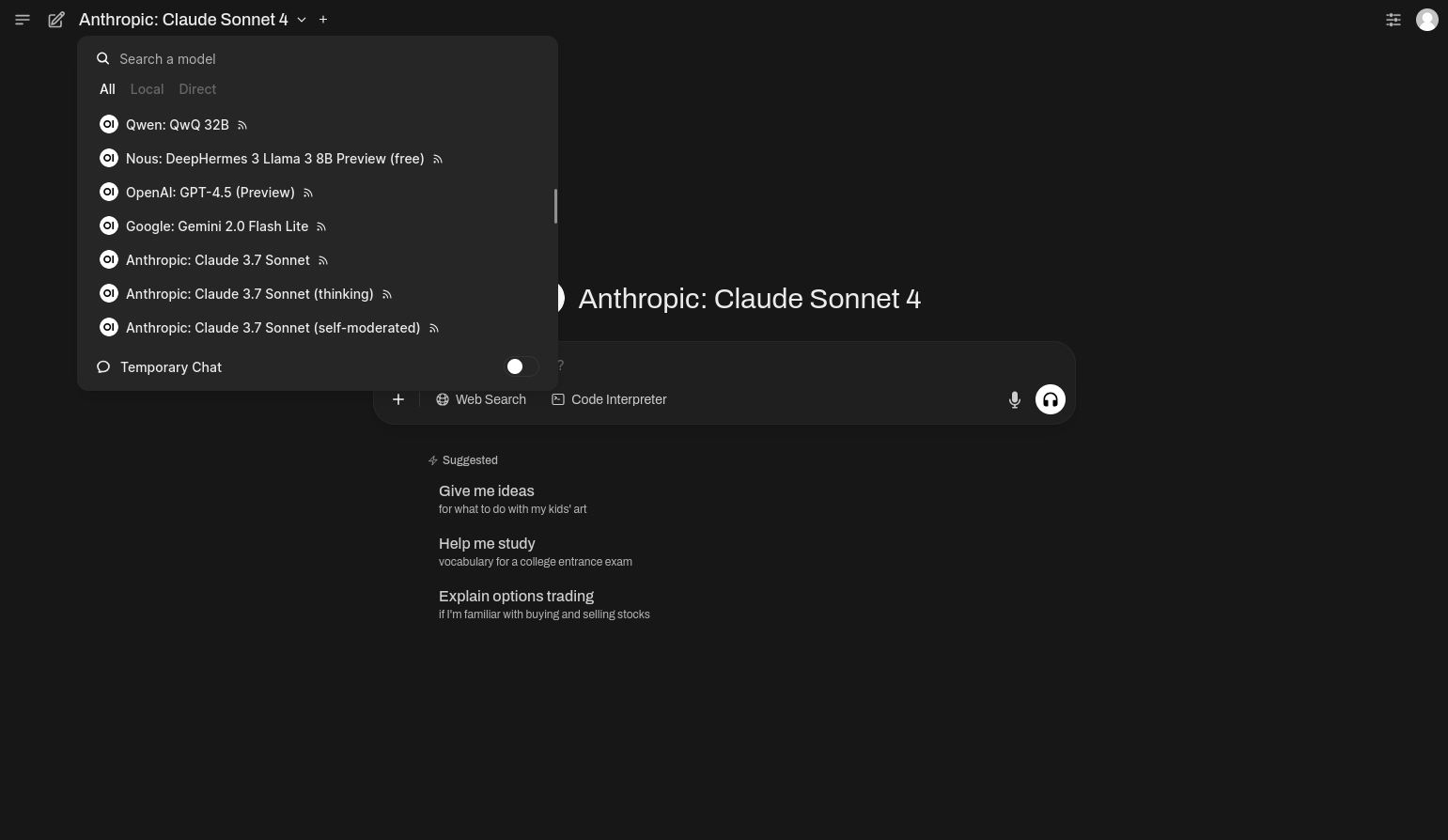

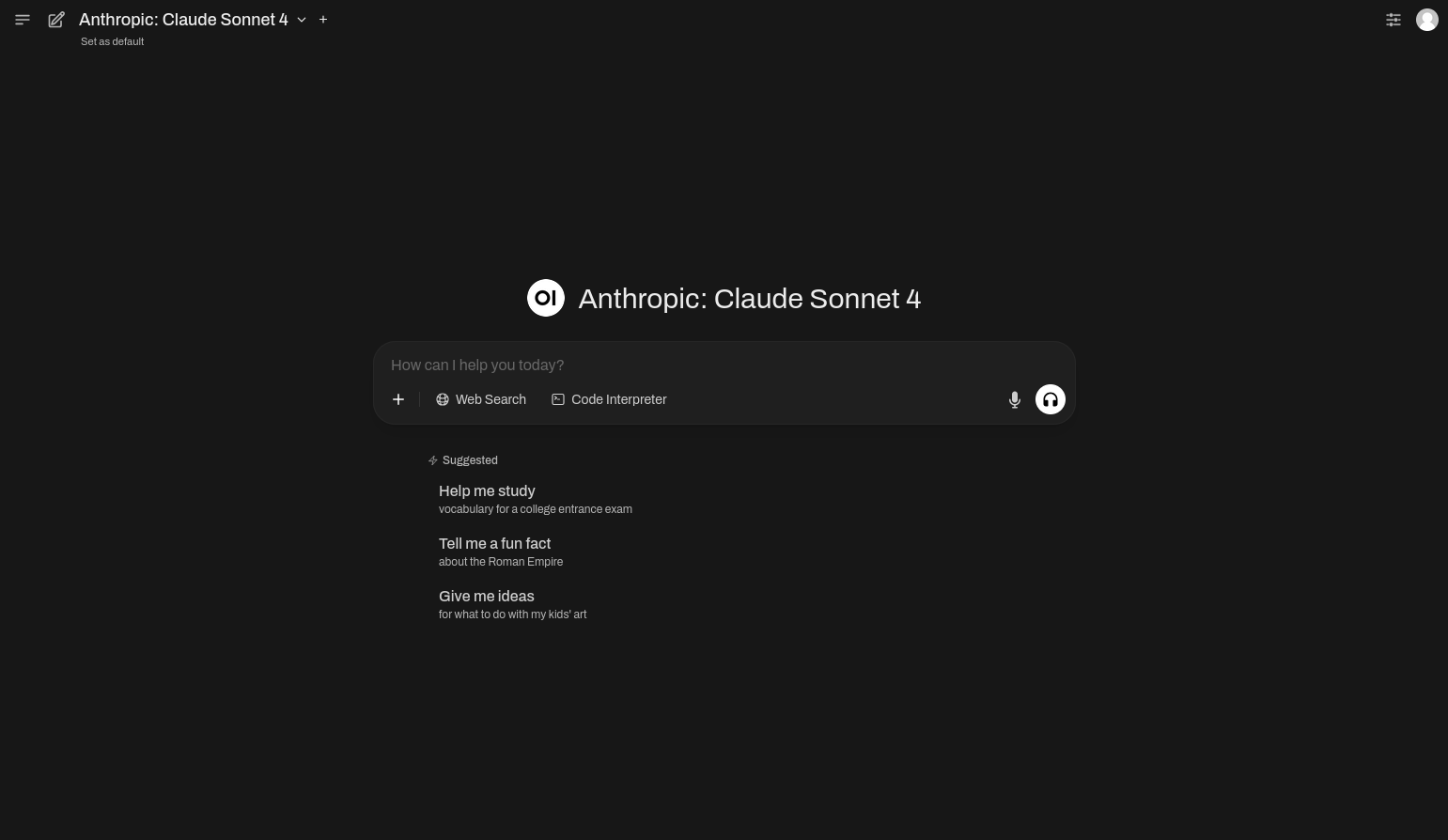

Access Multiple AI Models on Gnoppix

Gnoppix Chat Interface

Why Should You Choose Gnoppix AI?

Here are some of the main advantages of using Gnoppix AI to access all AI models:

1. Easily find the right models for your use cases

By accessing multiple AI models in one platform, you can easily compare outputs by switching back and forth between them.

This helps you identify the best model for your specific needs, whether you’re working on content generation, coding, or any other task.

More than that, you can fine-tune your workflow and choose the model that delivers the most accurate results at the optimal cost.

Switch AI models mid-conversation

2. Optimize cost

There is no need to pay for separate monthly subscriptions such as ChatGPT Plus or Claude Pro. Instead, you use the AI models via their APIs and pay only for what you use: you are charged based on the number of tokens (chunks of text, often a word or part of a word) the model processes.

Use with your own API key

Define your own System Prompt

This is particularly beneficial for low-volume users who may not use AI regularly. Instead of paying for a subscription you might not fully utilize, the pay-per-usage model ensures you’re only charged when you engage with the models, which saves you money on idle time.
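As a rough illustration of pay-per-use pricing, the sketch below estimates a monthly API bill from token counts and compares it with two roughly $20/month subscriptions. The model names `model-a` and `model-b` and their per-million-token prices are hypothetical placeholders; real prices differ by model and change often, so check each provider's current price list.

```python
# Hypothetical prices in USD per 1 million tokens; real prices vary by model and change often.
PRICES = {
    "model-a": {"input": 2.00, "output": 8.00},
    "model-b": {"input": 3.00, "output": 15.00},
}

SUBSCRIPTIONS_PER_MONTH = 40.00  # e.g. two chat subscriptions at about $20/month each


def api_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the pay-per-use cost in USD for the given token counts."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


def monthly_cost(usage: list[tuple[str, int, int]]) -> float:
    """Sum the cost of (model, input_tokens, output_tokens) entries for one month."""
    return sum(api_cost(model, inp, out) for model, inp, out in usage)


if __name__ == "__main__":
    usage = [
        ("model-a", 300_000, 100_000),  # e.g. drafting and editing blog posts
        ("model-b", 200_000, 50_000),   # e.g. occasional coding help
    ]
    total = monthly_cost(usage)
    print(f"API cost this month: ${total:.2f} (subscriptions: ${SUBSCRIPTIONS_PER_MONTH:.2f})")
```

With these made-up numbers the script prints `API cost this month: $2.75 (subscriptions: $40.00)`. Heavy usage or pricier models can exceed a subscription, so it is worth running the numbers for your own workload.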

UI Personalisation

3. Intuitive chat interface with customizable experience

Gnoppix AI is the best chat interface on the market for accessing multiple AI models.

“This is the best ChatGPT client and I tested so many! So much better than the OpenAI user interface.”

Mirel Vasile – Co-founder @Nextasee and @Anticipa

The Gnoppix AI chat interface stands out because you can:

- Customize the settings of every AI model, adjusting its configuration and parameters

- Search chat histories and organize chats into folders

- Work distraction-free with light and dark modes and keyboard shortcuts

- Build your own prompt library

- Develop a collection of AI agents with custom training data to help you with specific tasks

- Use voice input and text-to-speech

Gnoppix AI Chat Interface with AI Agent

4. Private and Secure

Gnoppix AI prioritizes privacy and security. We are proud to be SOC 2 Certified and GDPR Compliant, which ensures that your data is handled with the highest standards.

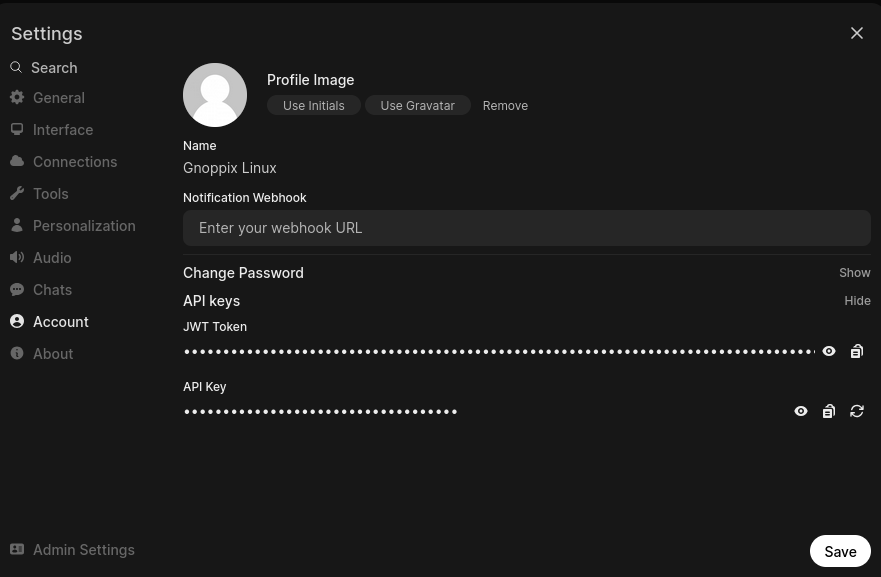

With Gnoppix AI, you use your own API keys to access AI models, and rest assured, none of your data – including chat history, AI agents, or prompts – will be used for model training or shared externally.

Read more in our Privacy Policy.

5. Continuous updates with new AI models

With the rapid development of AI, the Gnoppix AI team is dedicated to delivering timely updates to ensure your access to the latest AI models and features. This commitment helps users stay ahead in the competitive market.

We ship new updates almost every week; check out our AI Forum.